Automatic Testing

Automated test generation consists of computing a set of

experiments/scenarios/test cases which can be used to determine whether the

implementation behaves correctly. Test execution consists of applying

the experiment to the implementation, i.e. supplying inputs and checking the

outputs. Ideally, testing is completely automatic after the specification has

been given.

The DSS unit has broad expertise in the specification, analysis, and

construction of real-time systems. We therefore emphasize the specific

real-time problems ranging from analysis of formal specifications to realisation

of systems using hardware and real-time operating systems. Kim's expertise is in

algorithms and tools for analysis of real-time systems. Brian's is in real-time

testing (PhD thesis on the subject) and construction of real-time systems.

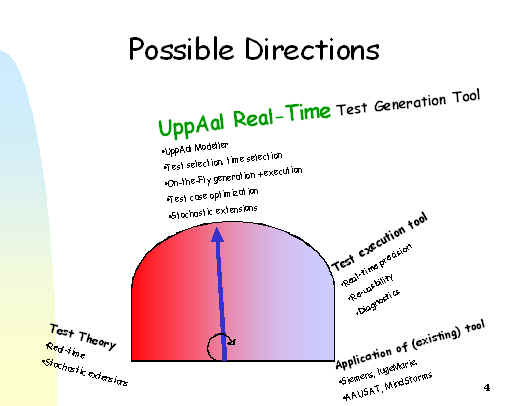

Within the context of automated testing we offer a wide spectrum

of possible projects, ranging from the highly theoretical to the highly

practical and constructive. A single project may also combine ingredients

from each aspect.

-

Construction of a real-time test generation

tool based on UppAal

Real-time tests must define precisely when an input to the system should be

generated and when a response should be expected. The time requirements will

be given as timed automata (formal model of real-time UML) specifications.

The UppAal verification engine and its underlying algorithms and data structures

(zones) can also be adapted to generate test cases.

-

A promising approach to test large systems effectively

is to use on-the-fly testing. Here the implementation under test (IUT) and Uppaal are interconnected

(output events from Uppaal are connected to input events of the impl,

and vice versa), and the timed automata model is executed/simulated in

real time to produce input events (event type and time at which the event

should be supplied) for the impl and compute "expectancies" for outputs

(event type and deadline by which the event must be received). This procedure

is repeated a very large number of times to produce test sequences of

literally 100,000+ events. Our hypothesis is that this approach will be

able to handle very large specifications (avoid state space explosion)

and achieve good coverage. There are some indications that this approach

is a highly effective stress testing technique.

-

Test generation strategies. Intuitively, the more

optimal the test cases to be generated (e.g. the fewest possible, fastest total

execution time, minimal cost, highest coverage, etc.), the more

computation and computation time is required a priori by the

generation tool. At other times it is more important to handle very

large specs or generate test events very fast. Several compromises

between computing test events fast and computing optimal test sequences

exist:

-

Post-mortem analysis: At one extreme, one may simply

blindly or randomly generate an input sequence without having the

faintest idea of how the impl should react. The sequence is executed

and the outputs from the impl are logged. After execution, the log is

checked against the spec to determine whether the impl responded correctly.

-

On-the-fly: The specification is

interpreted/executed dynamically and valid test sequences are

computed.

-

On-the-fly with precomputed data structures: To

optimise speed, many manipulations of the specification are

performed before test generation starts, producing data structures

containing answers/results/information that will be needed during

execution.

-

An optimal test suite is produced before execution of

any test case starts.

-

What kind of TA specifications should be accepted?

Open/closed specifications? Modeling of environment assumptions? How

are test cases generated? How can the UppAal Engine be adapted to test

generation? How to select test cases? How to select the time instances

where inputs should be delivered and outputs expected?
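
The on-the-fly loop described above can be sketched in Python as follows. This is only an illustration under strong assumptions, not UppAal's algorithm: the specification is a hypothetical light-switch model written as a flat list of edges, each with a delay window measured from the moment its source state was entered, and delays are simulated numbers rather than wall-clock measurements. The names `Edge`, `SPEC`, `StubIUT`, and `on_the_fly_test` are invented for this sketch. A real tool would operate on timed automata and zones.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str
    kind: str        # "input" (tester -> IUT) or "output" (IUT -> tester)
    event: str
    earliest: float  # earliest allowed delay after entering `source`
    latest: float    # latest allowed delay (the deadline)
    target: str


# Hypothetical spec: after "press", the light must react within 2 time units.
SPEC = [
    Edge("off", "input", "press", 0.0, 10.0, "switching_on"),
    Edge("switching_on", "output", "light_on", 0.0, 2.0, "on"),
    Edge("on", "input", "press", 1.0, 10.0, "switching_off"),
    Edge("switching_off", "output", "light_off", 0.0, 2.0, "off"),
]


class StubIUT:
    """Stand-in implementation that answers after a fixed (simulated) delay."""

    def __init__(self, response_delay):
        self.response_delay = response_delay
        self.is_on = False

    def send(self, event, delay):
        self.is_on = not self.is_on  # every "press" toggles the light

    def receive(self):
        return ("light_on" if self.is_on else "light_off", self.response_delay)


def on_the_fly_test(iut, steps, rng):
    """Interleave test generation and execution for `steps` events."""
    state, log = "off", []
    for _ in range(steps):
        edges = [e for e in SPEC if e.source == state]
        inputs = [e for e in edges if e.kind == "input"]
        if inputs:
            # Pick an input and a delivery time allowed by the spec.
            edge = rng.choice(inputs)
            delay = rng.uniform(edge.earliest, edge.latest)
            iut.send(edge.event, delay)
            log.append(("in", edge.event, delay))
            state = edge.target
        else:
            # Observe an output and compare it with the expectancies.
            event, delay = iut.receive()
            log.append(("out", event, delay))
            allowed = [e for e in edges if e.kind == "output"
                       and e.event == event
                       and e.earliest <= delay <= e.latest]
            if not allowed:
                return "fail", log
            state = allowed[0].target
    return "pass", log


if __name__ == "__main__":
    print(on_the_fly_test(StubIUT(1.0), 6, random.Random(0))[0])
    print(on_the_fly_test(StubIUT(3.0), 6, random.Random(0))[0])
```

Because only the current state is kept, the cost per event does not depend on how long the test has been running, which is the intuition behind using this style for very long test sequences.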

-

A Real-Time Test Executive

Automated test execution requires that a test computer (or test software)

can communicate with the implementation under test, send and receive byte

sequences representing abstract events. A main problem in testing of hard

real-time systems is the timeliness of the test execution. The communication

delay from the test interpreter until the event is delivered at the

implementation interface (and vice versa) must be bounded, and preferably

low. This uncertainty must be measured. Also, the events must be

precisely timestamped and logged, and the timestamps must be relayed to the

test interpreter/generator to ensure that correct verdicts are given, and

that predictions of future events are precise and valid. This

requires real-time support from the OS (predictable scheduling, memory

locking, ...). How well can this be done on off-the-shelf OSs (Win2k,

Solaris) and on specialised real-time operating systems?

Another main problem is that instrumenting the implementation and developing

the required communication are very time consuming. Therefore we seek an

efficient, general, reusable tool for test execution, based on a layered

architecture. Other problems include:

-

real-time testing: How to execute the test in a timely and

precise manner? Implementation on an RT-OS.

-

pseudo real-time testing: Sometimes real-time takes too

long. How can (faithful) time leaps be added to allow for faster test

execution?

-

greybox testing: How to interrogate the implementation

about state information that can help the test tool/engineer?

-

generation of diagnostic information: A fail verdict

alone is not very helpful. Why did the implementation fail? Where?

Reproducibility?
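
The time leaps mentioned under pseudo real-time testing can be pictured with a virtual clock that jumps straight to the next scheduled event instead of waiting. The Python sketch below is a minimal illustration; the `VirtualClock` class and its methods are hypothetical names. It also assumes that the implementation under test reads time from the same clock, since otherwise the leap would not be faithful.

```python
import heapq


class VirtualClock:
    """Simulated clock that leaps to the next due event instead of sleeping."""

    def __init__(self):
        self.now = 0.0
        self._queue = []  # heap of (due_time, sequence_number, callback)
        self._seq = 0     # tie-breaker so callbacks are never compared

    def call_at(self, due, callback):
        """Schedule `callback(now)` to run when the clock reaches `due`."""
        heapq.heappush(self._queue, (due, self._seq, callback))
        self._seq += 1

    def run(self):
        """Process all events in time order, leaping over idle periods."""
        while self._queue:
            due, _, callback = heapq.heappop(self._queue)
            self.now = max(self.now, due)  # time never moves backwards
            callback(self.now)


if __name__ == "__main__":
    clock = VirtualClock()
    clock.call_at(3600.0, lambda now: print("timeout expected at", now))
    clock.call_at(5.0, lambda now: print("send press at", now))
    clock.run()  # finishes immediately although an hour of model time passes
```

Callbacks may schedule further events while the clock is running, so a test interpreter could use the same loop to plan the next input after each observed output.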

-

Application of automated testing

A final option is to take an existing test generation tool (e.g. TorX

developed at Twente) and apply it to an industrial application to evaluate

the effectiveness and limitations of the tool. If you choose this direction

you will work with a very interesting application domain. The scientific

purpose is twofold: 1) It will demonstrate the effectiveness of automated

testing to industrial partners, and 2) it will generate ideas for new

desired functionality, improvements, and methods of circumventing its

limitations. Applications could be:

-

AAU Student Satellite (a satellite to be constructed

essentially by students only)

-

Advanced communication protocols (e.g. Siemens Mobile

Phones)

-

Control software in "LugeMarie"

-

Lego Mindstorms robots

-

Probabilistic testing at Lyngsø Industries.

-

Development of a real-time testing theory

An implementation relation is the correctness criterion used in automated

testing defining what it means for an implementation to be correct with

respect to a given specification. It is usually modelled formally as a

mathematically defined relation between two formal objects, such as two labeled

transition systems. It describes precisely what behaviors of the

implementation are acceptable compared to a given specification. A good

implementation relation is very important in practice because we neither

wish to accept an implementation which does not really work, nor reject an

implementation that actually works. Formulation of an implementation

relation cannot be done without a glimpse at practical considerations about

observability of the implementation and test execution realities: for

instance, we may not be able to measure precisely at what time instant an

event occurred, but only within some bounds. Clearly, a theory requiring exact

timing is imperfect. An implementation relation for real-time systems does not

exist.
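
To illustrate the point about bounded measurement precision, the Python sketch below compares an observed timestamp, known only to within plus or minus `epsilon`, against the interval a specification allows. It is a hypothetical illustration and not an established implementation relation. The function name `timing_verdict` and the three-valued verdict are assumptions made for this example.

```python
def timing_verdict(observed, epsilon, earliest, latest):
    """Judge an event whose true time lies in [observed - eps, observed + eps].

    The specification allows the event in [earliest, latest].
    """
    low, high = observed - epsilon, observed + epsilon
    if earliest <= low and high <= latest:
        return "pass"          # every possible true time is allowed
    if high < earliest or low > latest:
        return "fail"          # no possible true time is allowed
    return "inconclusive"      # the measurement straddles a bound


if __name__ == "__main__":
    print(timing_verdict(1.0, 0.1, 0.0, 2.0))
    print(timing_verdict(2.05, 0.1, 0.0, 2.0))
    print(timing_verdict(2.5, 0.1, 0.0, 2.0))
```

A relation that demanded exact timing would have to treat the middle case as either pass or fail, and so risk accepting a faulty implementation or rejecting a correct one.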


Topic: Automatic Testing


Posted: 14Sep2009 at 12:28am
